In today’s AI-driven economy, firms without the right systems risk falling behind. Advanced tools demand much more than standard IT; they need specialised environments built for speed, scale, and security. This foundation supports everything from automation to analytics, enabling real-time decisions and long-term competitive advantage.

Nowhere is this more urgent than in the legal sector, where firms embracing AI can streamline document handling, enhance compliance, and deliver faster outcomes. But plugging AI into outdated systems won’t work. AI infrastructure isn’t just support; it’s the foundation for real transformation in how legal services are delivered.

Defining AI Infrastructure: How It Compares to Traditional IT

AI infrastructure is a purpose-built tech stack that includes specialised hardware, high-speed storage, powerful networks, and orchestration software. It’s designed to support compute-intensive tasks, such as machine learning, deep learning, and real-time data inference, at scale.

Traditional IT infrastructure, by contrast, supports general business tools like email, CRMs, and file storage. It relies on general-purpose CPUs and standard office networks, which makes it unsuitable for AI’s high-throughput, model-driven workloads.

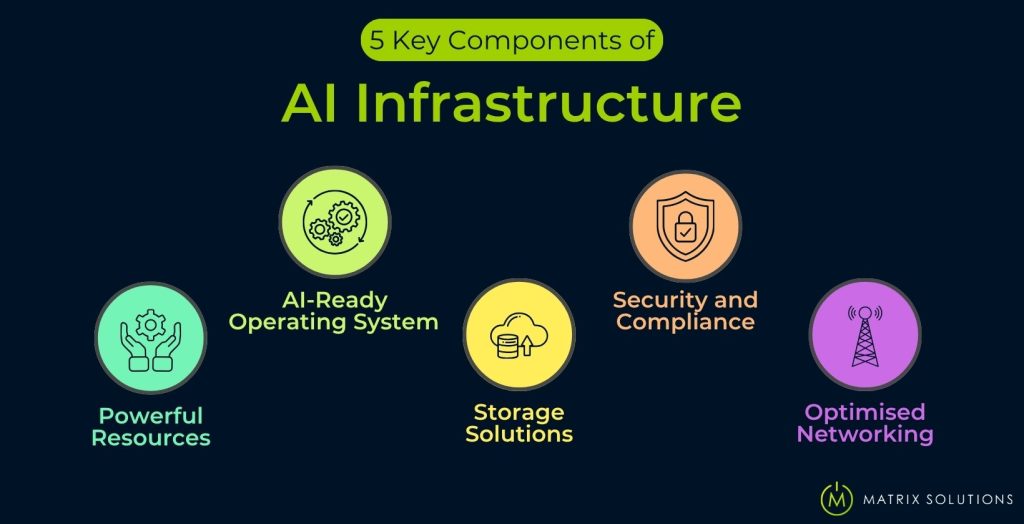

What are the Core Components of an AI Infrastructure?

Building a high-performance AI infrastructure requires more than just raw computing power – it demands a tightly integrated stack of hardware, software, and networking that can support complex, data-intensive workloads at scale.

Traditional IT lacks this coordination. AI infrastructure components include compute, storage, networking, and orchestration, which must work in sync. Each layer plays a critical role in training, deployment, and scaling. Without alignment, even advanced AI tools underperform.

In the following sections, we will break down the essential components, including hardware, software, and networking, and explain how each one supports scalable, real-time AI performance.

What Hardware Powers High-Performance AI Infrastructure?

High-performance workloads demand specialised hardware for AI, far beyond what traditional IT can deliver. Each component must support high-speed processing, large datasets, and real-time inference without bottlenecks.

- GPUs (Graphics Processing Units)
  - Enable fast, parallel computations
  - Essential for model training and inference
  - Common models: NVIDIA A100, H100

- High-Performance Servers
  - Support multi-GPU configurations
  - Handle large-scale training pipelines
  - Built for high-throughput compute loads

- AI-Optimised Storage
  - Uses NVMe, SSDs, or object storage
  - Delivers low-latency data access
  - Prevents I/O bottlenecks in real-time tasks

What Software and Platforms Enable AI Workflows?

To enable AI workflows end-to-end, organisations rely on specialised software and platforms designed to support each stage of the AI lifecycle. These tools typically fall into three core categories:

1. AI Frameworks

Frameworks like TensorFlow, PyTorch, and MXNet power model development. These tools provide the building blocks for training deep learning, NLP, and image recognition models with high accuracy and scalability.

2. Orchestration Tools

Kubernetes, Docker, and Kubeflow manage the deployment and scaling of AI models across cloud and hybrid environments. They ensure consistency, automate workflows, and enable repeatable, secure model operations.

3. Data Management Platforms

Solutions such as Snowflake, Databricks, and Apache Airflow enable secure data ingestion, transformation, and pipeline control. These platforms ensure AI models have fast, structured access to relevant, clean data at scale.

How Does Networking Enable AI Data Flow?

AI workflows move massive volumes of data between compute, storage, and endpoints. Standard office networks can’t handle this load, and the resulting bottlenecks delay training and slow down inference. AI networking requirements typically include 10-100 Gbps interconnects (e.g., InfiniBand) to support high-throughput pipelines and keep distributed systems performing smoothly in real time.
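To make the bandwidth gap concrete, here is a rough back-of-envelope sketch in Python. The 2 TB dataset size and the 80% link efficiency are illustrative assumptions, not measured figures, and `transfer_seconds` is a hypothetical helper written for this example.

```python
def transfer_seconds(dataset_gb: float, link_gbps: float, efficiency: float = 0.8) -> float:
    """Estimate seconds needed to move a dataset over a network link.

    dataset_gb: dataset size in gigabytes (10^9 bytes).
    link_gbps:  nominal link speed in gigabits per second.
    efficiency: assumed fraction of nominal bandwidth actually achieved.
    """
    if link_gbps <= 0 or not 0 < efficiency <= 1:
        raise ValueError("link_gbps must be positive and efficiency in (0, 1]")
    dataset_gigabits = dataset_gb * 8  # 8 bits per byte
    return dataset_gigabits / (link_gbps * efficiency)


if __name__ == "__main__":
    size_gb = 2000  # hypothetical 2 TB training set
    for speed in (1, 10, 100):  # office network vs. AI-grade interconnects
        minutes = transfer_seconds(size_gb, speed) / 60
        print(f"{speed:>3} Gbps: {minutes:.1f} minutes")
```

Under these assumptions, the same dataset that takes hours to move on a 1 Gbps office network moves in minutes on a 100 Gbps interconnect. Real transfers also depend on storage speed and protocol overhead.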

How Does AI Infrastructure Work in Practice?

Let’s say a legal firm wants to reduce client churn. Every phase, from CRM ingestion and data preparation through training, storage, and real-time alerts, must work in sync. This is where infrastructure turns AI from concept to action.

Ingesting and Preparing Enterprise Data

AI infrastructure securely connects to CRMs, ERPs, and DMS platforms to ingest raw business data. Through AI data preparation, this data is cleaned, labelled, and transformed into structured formats using tools like Databricks and Apache NiFi, ensuring that models learn from accurate, high-quality inputs.
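As a simplified illustration of the cleaning and labelling step, the Python sketch below normalises a few CRM-style records. The field names, the 90-day inactivity label, and the helper functions are all hypothetical. In practice a platform like Databricks or Apache NiFi would do this work at scale.

```python
from typing import Optional

REQUIRED_FIELDS = ("client_id", "email", "last_contact_days")  # hypothetical schema


def clean_record(raw: dict) -> Optional[dict]:
    """Return a normalised record, or None if the record is unusable."""
    # Trim stray whitespace from string values.
    record = {k: v.strip() if isinstance(v, str) else v for k, v in raw.items()}
    # Drop records that are missing any required field.
    if any(record.get(field) in (None, "") for field in REQUIRED_FIELDS):
        return None
    try:
        days = int(record["last_contact_days"])
    except (TypeError, ValueError):
        return None
    if days < 0:
        return None
    return {
        "client_id": str(record["client_id"]),
        "email": str(record["email"]).lower(),
        "last_contact_days": days,
        # Illustrative label only: clients not contacted for 90+ days.
        "inactive": days >= 90,
    }


def prepare(rows: list[dict]) -> list[dict]:
    """Clean all rows and remove duplicates by client_id (first one wins)."""
    seen, cleaned = set(), []
    for row in rows:
        record = clean_record(row)
        if record is not None and record["client_id"] not in seen:
            seen.add(record["client_id"])
            cleaned.append(record)
    return cleaned
```

Records with missing or malformed fields are dropped here rather than repaired. That is a design choice for the sketch, and a real pipeline might quarantine them for review instead.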

Training AI Models Using Compute Infrastructure

After preparation, data is fed into GPUs for training AI models. These high-performance processors run iterative cycles for hours or days to identify patterns and optimise accuracy. Traditional IT hardware lacks the parallel processing power to handle this workload effectively.
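The "iterative cycles" idea can be shown with a toy example. The sketch below trains a tiny logistic regression with batch gradient descent in plain Python. It is a teaching aid under simplified assumptions (two made-up, pre-scaled features and four clients), not how production models are trained. Real workloads use frameworks such as PyTorch or TensorFlow on GPUs, repeating the same kind of loop over millions of examples.

```python
import math


def sigmoid(z: float) -> float:
    return 1.0 / (1.0 + math.exp(-z))


def predict(weights, bias, x) -> float:
    """Predicted probability of churn for one feature vector."""
    return sigmoid(sum(w * xi for w, xi in zip(weights, x)) + bias)


def train(features, labels, epochs=500, lr=0.5):
    """Fit weights and bias by batch gradient descent on log loss."""
    n = len(features)
    weights, bias = [0.0] * len(features[0]), 0.0
    for _ in range(epochs):  # each pass is one training cycle
        grad_w, grad_b = [0.0] * len(weights), 0.0
        for x, y in zip(features, labels):
            error = predict(weights, bias, x) - y
            for j, xi in enumerate(x):
                grad_w[j] += error * xi
            grad_b += error
        # Step against the average gradient.
        weights = [w - lr * g / n for w, g in zip(weights, grad_w)]
        bias -= lr * grad_b / n
    return weights, bias


# Hypothetical scaled features: [time since last contact, engagement level]
X = [[0.1, 0.9], [0.2, 0.8], [0.8, 0.2], [0.9, 0.1]]
y = [0, 0, 1, 1]  # 1 = client churned
weights, bias = train(X, y)
```

After training, `predict(weights, bias, [0.9, 0.1])` returns a probability above 0.5 and `predict(weights, bias, [0.1, 0.9])` one below it. Every step of the inner loop is independent arithmetic, which is why parallel hardware speeds it up so much.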

Managing and Storing Models and Data

AI infrastructure management must strike a balance between speed and security. Trained models require fast-access storage to support real-time inference and encrypted environments to protect them as intellectual property. Secure repositories with version control and access logs help prevent theft, tampering, or data loss.
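One way to picture version control, access logs, and tamper detection working together is the minimal in-memory sketch below. `ModelRegistry` is a hypothetical class written for this article, not a real product API. It records a SHA-256 digest per model version and logs every access.

```python
import hashlib
from datetime import datetime, timezone


class ModelRegistry:
    """In-memory registry: versioned artefact hashes plus an access log."""

    def __init__(self):
        self._versions = {}   # (name, version) -> SHA-256 hex digest
        self.access_log = []  # (timestamp, user, action, name, version)

    def _log(self, user, action, name, version):
        stamp = datetime.now(timezone.utc).isoformat()
        self.access_log.append((stamp, user, action, name, version))

    def register(self, user, name, version, artefact: bytes) -> str:
        """Record a new version; existing versions cannot be overwritten."""
        if (name, version) in self._versions:
            raise ValueError("versions are immutable; register a new version")
        digest = hashlib.sha256(artefact).hexdigest()
        self._versions[(name, version)] = digest
        self._log(user, "register", name, version)
        return digest

    def verify(self, user, name, version, artefact: bytes) -> bool:
        """True only if the artefact matches the digest recorded at registration."""
        self._log(user, "verify", name, version)
        expected = self._versions.get((name, version))
        return expected is not None and hashlib.sha256(artefact).hexdigest() == expected
```

A deployment step could call `verify` before loading a model and refuse to serve it if the check fails. Encryption at rest and durable storage are out of scope for this sketch.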

Deploying AI for Business Outcomes

Once validated, models are deployed into production through CI/CD pipelines and ML platforms like Kubeflow. In live environments, they generate real-time predictions, such as flagging at-risk clients, optimising resource allocation, or automating document workflows.
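For the churn scenario, the last step from prediction to action can be as simple as the sketch below. The client IDs, scores, and 0.7 threshold are illustrative assumptions. A real deployment would tune the threshold against business costs.

```python
def flag_at_risk(scores: dict[str, float], threshold: float = 0.7) -> list[str]:
    """Return client IDs whose churn score meets the threshold, highest first."""
    flagged = [(cid, score) for cid, score in scores.items() if score >= threshold]
    flagged.sort(key=lambda item: item[1], reverse=True)
    return [cid for cid, _ in flagged]


# Hypothetical model outputs: client ID -> predicted churn probability
alerts = flag_at_risk({"C1": 0.91, "C2": 0.35, "C3": 0.74})
```

Here `alerts` contains `C1` and `C3`, in that order, ready to be passed to an alerting or CRM workflow.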

Providing Ongoing Infrastructure Support

AI is not a ‘set and forget’ system. Without continuous model monitoring, models drift, lose accuracy, and create risk. Retraining and maintenance are essential as business data evolves. This is where Matrix Solutions stands apart, offering long-term support that generic IT providers rarely match.
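A very simple form of model monitoring compares live accuracy against the accuracy measured at deployment. The sketch below assumes you can collect actual outcomes for recent predictions. The function names and the 5-point tolerance are hypothetical choices for illustration.

```python
def accuracy(predictions, actuals) -> float:
    """Share of predictions that matched the actual outcome."""
    if not predictions or len(predictions) != len(actuals):
        raise ValueError("need two non-empty sequences of equal length")
    correct = sum(p == a for p, a in zip(predictions, actuals))
    return correct / len(predictions)


def needs_retraining(baseline_accuracy, predictions, actuals, tolerance=0.05) -> bool:
    """Flag the model when live accuracy falls more than `tolerance` below baseline."""
    return accuracy(predictions, actuals) < baseline_accuracy - tolerance
```

Production monitoring usually also tracks shifts in the input data itself, since accuracy can only be measured once real outcomes are known.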

What Security and Compliance Challenges Come With AI Infrastructure?

AI infrastructure brings unique enterprise-level threats, including data breaches, IP theft, and compromised model integrity. These AI security risks are particularly critical in sectors such as law and finance, where compliance and trust are of paramount importance.

The following sections break down the legal and technical challenges firms must address.

Data Privacy and the Australian Privacy Act

AI infrastructure must comply with the Australian Privacy Act. In regulated sectors like law, finance, and healthcare, these rules are non-negotiable. Compliance risk also depends on where the infrastructure is hosted, with cloud and local hosting differing significantly in data residency and control.

Protecting Proprietary Algorithms and IP

AI models, training data, and algorithms are valuable business IP. Protect this IP with encrypted storage, role-based access, and secure hosting. These controls prevent reverse-engineering and theft. In high-risk sectors, poor infrastructure opens the door to competitor espionage and insider misuse.
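Role-based access is easiest to understand as a deny-by-default lookup. The roles and actions in this Python sketch are hypothetical examples. A real system would load them from an identity provider or policy store.

```python
ROLE_PERMISSIONS = {  # hypothetical roles and the actions each may perform
    "ml_engineer": {"read_model", "write_model", "read_training_data"},
    "analyst": {"run_inference"},
    "auditor": {"read_access_log"},
}


def is_allowed(role: str, action: str) -> bool:
    """Deny by default: unknown roles and unlisted actions are refused."""
    return action in ROLE_PERMISSIONS.get(role, set())
```

With this mapping, an analyst can run inference but cannot download model weights, which limits what a compromised or misused account can expose.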

Ensuring Data and Model Integrity

Threats like data poisoning, adversarial attacks, and corrupted inputs can distort AI outcomes. AI data integrity relies on infrastructure that incorporates validation layers, version control, and monitoring. In sectors such as finance and logistics, compromised predictions can lead to fraud, loss, or operational failure, which makes integrity safeguards crucial.
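A validation layer can start as a set of range checks that run before any record reaches a model. The feature names and ranges below are assumptions made for illustration. In practice they would be derived from trusted historical data.

```python
FEATURE_RANGES = {  # hypothetical expected ranges per input feature
    "last_contact_days": (0, 3650),
    "open_matters": (0, 500),
}


def validate_input(record: dict) -> list[str]:
    """Return a list of problems; an empty list means the record passed."""
    problems = []
    for name, (low, high) in FEATURE_RANGES.items():
        value = record.get(name)
        # bool is a subclass of int in Python, so exclude it explicitly.
        if isinstance(value, bool) or not isinstance(value, (int, float)):
            problems.append(f"{name}: missing or not numeric")
        elif not low <= value <= high:  # also rejects NaN
            problems.append(f"{name}: {value} outside [{low}, {high}]")
    return problems
```

Range checks like these catch corrupted or obviously manipulated inputs. They do not stop subtle adversarial attacks, which need dedicated defences.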

Why AI Infrastructure Is a Strategic Priority for Australian Businesses

An effective AI infrastructure solution isn’t just about reducing risk; it’s a strategic asset. Australian businesses are reporting an average revenue benefit of $361,315 from expanding their AI solutions while reducing business costs. And for SMEs, it unlocks speed, automation, and smarter decisions, giving them a real edge in a digitally competitive market.

Achieve Superior Cybersecurity & Compliance

Well-designed AI infrastructure can be aligned with security and compliance standards such as ISO 27001 and SOC 2, as well as local data residency laws. Integrated safeguards, including encryption, access controls, and audit logging, protect both operational data and AI models. This builds regulatory trust, reduces legal risk, and reinforces confidence among clients and stakeholders.

Drive Real-World Operational Efficiency and Cost Reduction

A solid AI infrastructure enables automation across document processing, customer service, and contract review. By leveraging GPU clusters and optimised data pipelines, firms reduce manual effort, accelerate outcomes, and lower cloud training costs, delivering faster and more efficient operations at scale.

Simplify Scalability and Future Growth

Matrix builds modular infrastructure (hybrid, cloud-native, or on-premises) so clients can scale AI workloads as they adopt NLP, computer vision, or real-time inference. This flexibility supports rapid innovation and long-term competitiveness without costly rebuilds.

Improve Real-Time Decision Making with AI Insights

Low-latency infrastructure enables real-time analysis of AI data, from fraud detection to dynamic pricing. With edge AI and streaming pipelines, businesses shift from reactive to proactive decisions. Integration with BI tools delivers instant insights, helping leaders prioritise risks, allocate resources, and act with confidence.

How to Choose the Right AI Infrastructure Model for Your Business

Choosing the right model within your AI infrastructure landscape is a strategic decision that affects cost, control, compliance, and growth. The next sections compare deployment types, build vs. buy options, and vendor criteria to help you select the right fit for your business.

On-Premises vs. Cloud vs. Hybrid Infrastructure Models

Choosing between on-premises, cloud, and hybrid models is critical to aligning infrastructure with business goals. The table below compares control, scalability, security, and cost across each option.

| Feature | On-Premises | Cloud | Hybrid |
| --- | --- | --- | --- |
| Control | Full control over all systems | Limited, managed by the provider | Balanced, customisable where needed |
| Scalability | Slower and CapEx-heavy | Instant, elastic scaling | Flexible and demand-driven |
| Security | High (if configured properly) | Varies, depends on provider | High with tailored policies |
| Cost | High upfront (CapEx) | Lower upfront, higher OpEx | Moderate OpEx plus some CapEx |
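The cost trade-off between upfront CapEx and ongoing OpEx comes down to a break-even calculation. The Python sketch below uses entirely hypothetical dollar figures. Substitute your own quotes before drawing any conclusions.

```python
def cumulative_cost(upfront: float, monthly: float, months: int) -> float:
    """Total spend after a given number of months."""
    return upfront + monthly * months


def break_even_month(onprem_upfront, onprem_monthly, cloud_monthly, horizon=120):
    """First month where cumulative on-premises cost is <= cumulative cloud cost.

    Returns None if on-premises never catches up within `horizon` months.
    """
    for month in range(1, horizon + 1):
        onprem = cumulative_cost(onprem_upfront, onprem_monthly, month)
        cloud = cumulative_cost(0.0, cloud_monthly, month)
        if onprem <= cloud:
            return month
    return None


# Hypothetical figures in AUD: $240k hardware plus $4k/month to run,
# compared with $12k/month for equivalent cloud capacity.
month = break_even_month(240_000, 4_000, 12_000)
```

With these made-up numbers, `month` is 30: on-premises only pays off after two and a half years of steady use. The sketch ignores hardware refresh cycles, staffing, and fluctuating demand, all of which affect the real comparison.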

Should You Build or Use Managed AI Infrastructure?

Build (DIY):

Building your own AI infrastructure poses a high risk for SMEs. It requires sourcing scarce GPUs, managing complex system integration, and hiring niche (often costly) talent. For most non-specialists, this leads to cost overruns and deployment delays, both common pitfalls for companies that take the DIY route.

Buy/Managed (The Smart Choice):

Managed AI infrastructure offers faster deployment, lower risk, and predictable costs. Matrix provides SMEs with enterprise-grade systems, expert support, and scalable design, without the need for in-house AI specialists. It’s the smarter path to operational value and long-term success.

What to Look For in an AI Infrastructure Provider?

Use this checklist to evaluate any artificial intelligence infrastructure provider, and see why many firms choose Matrix Solutions:

☐ Demonstrable AI Expertise: Do they support the full AI lifecycle, not just servers? (Matrix Solutions does.)

☐ Australian-Based & Sovereign: Is your data stored locally and managed by a local team? (Matrix Solutions is 100% Australian.)

☐ Security & Compliance Focus: Do they align with the Australian Privacy Act and industry-specific rules?

☐ Strategic Partnership Approach: Will they help shape your long-term AI roadmap, or will they just supply infrastructure?

☐ Flexible & Scalable Models: Can they grow with your business across hybrid, private, or cloud setups?

Frequently Asked Questions About AI Infrastructure

How much does AI Infrastructure cost?

AI Infrastructure cost depends on the deployment model. On-premises requires high CapEx, while managed services offer predictable OpEx. Costs vary with scale, but SMEs can access AI-ready systems from a few thousand AUD monthly.

Can SMEs adopt AI Infrastructure without large upfront investment?

Yes, SMEs can adopt AI Infrastructure through managed service models. These reduce upfront investment by offering subscription-based pricing, allowing access to enterprise-grade AI infrastructure without the capital burden of building it internally.

What's the main difference between AI Infrastructure and traditional IT Infrastructure?

The main difference is purpose. AI Infrastructure supports high-performance workloads, such as model training and inference, while traditional IT focuses on general productivity tasks, including email, CRM systems, and file sharing, using basic CPU-based systems.

How does AI Infrastructure support hybrid or private cloud environments?

AI Infrastructure supports hybrid and private clouds by using a modular architecture. This enables organisations to control data residency, security, and performance while scaling AI workloads across both on-prem and cloud platforms as needed.

Why is cybersecurity critical in AI Infrastructure?

Cybersecurity is critical in AI Infrastructure because models handle sensitive data and decision logic. Attacks can poison training, steal IP, or corrupt outputs. Secure infrastructure protects data integrity, privacy, and long-term AI reliability.

Is AI Infrastructure secure for sensitive client data?

Yes, AI Infrastructure is secure for sensitive client data when built with encryption, role-based access, and local data residency. These controls align with privacy laws and reduce risk in legal, financial, and healthcare sectors.

How long does it take to deploy AI Infrastructure?

Deployment time depends on the infrastructure model. Managed cloud-based AI Infrastructure can go live in weeks. On-premises setups may take months due to hardware sourcing, integration, and compliance alignment.

Why Choose Matrix Solutions for Your AI Infrastructure Needs?

Firms in the legal, financial, and professional services sectors can’t afford generic IT solutions. They need secure, scalable solutions tailored to their regulatory environment. With over 27 years of industry experience, Matrix Solutions delivers expert-managed AI infrastructure cloud solutions designed for compliance, speed, and growth.

Why Matrix Solutions?

- Legal & AI Expertise: Deep knowledge of legal systems, governance, and workflows

- 100% Australian-Based: Local hosting with full data sovereignty

- Privacy-First Infrastructure: Aligned with the Australian Privacy Act

- Cloud-Ready & Scalable: Modular design for AI growth and performance


Ready to build your AI foundation with confidence?

Matrix Solutions provides the expert-managed AI infrastructure to get you there.

Book Your Free Consultation